Chaoxiang Ye is a second-year Ph.D. candidate at the MAVLab and the BioMorphic Intelligence Lab of Delft University of Technology (TU Delft), supervised by Guido de Croon and Salua Hamaza. He received a joint M.Eng. degree from the Southern University of Science and Technology (SUSTech) and the Shenzhen Institute of Advanced Technology (SIAT), Chinese Academy of Sciences (CAS), supervised by Zhengkun Yi, and a B.Eng. degree from Dalian University of Technology (DUT).

Email / GitHub / Google Scholar / LinkedIn

I'm interested in robotics, tactile perception and exploration, transfer learning, and multimodal fusion.

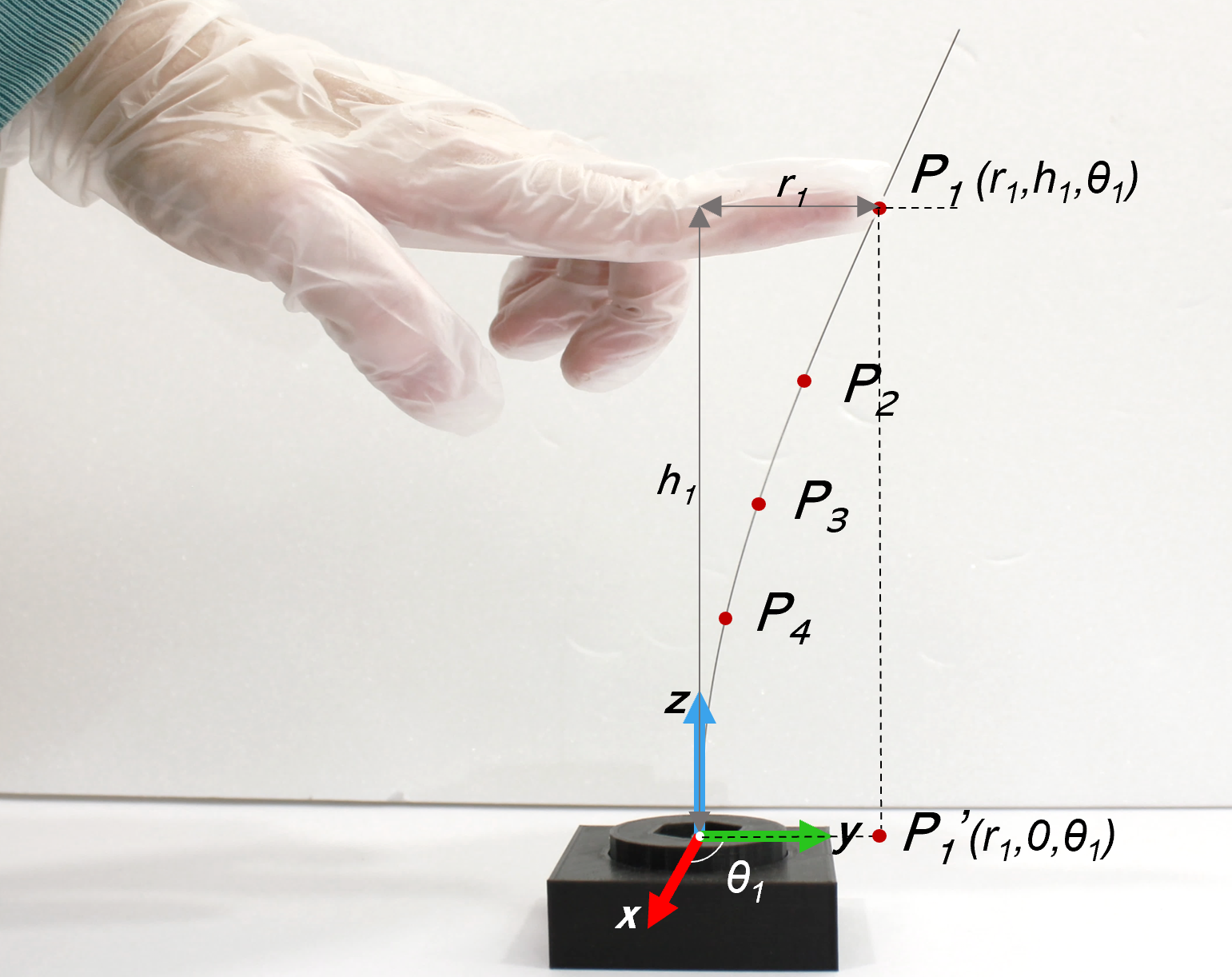

Chaoxiang Ye, Guido de Croon, and Salua Hamaza

ICRA, 2024

paper / design files / dataset / code

Unmanned air vehicles (UAVs) have traditionally been regarded as “eyes in the sky” that move in three dimensions and must avoid any contact with their environment. Contact, however, need not be a problem; it is an opportunity to expand the range of UAV applications. In this paper, we design, fabricate, and characterize a whisker sensor unit based on MEMS barometers that is suited to tactile localization on UAVs, featuring light weight, low stiffness, high sensitivity, a broad sensing range, and scalability. For the challenging task of contact point localization, we then propose a Recurrent Multi-output Network (RMN) that predicts 3D contact points under continuous contact conditions. By exploiting time-series information, the RMN addresses the non-linearity, hysteresis, and non-injective mapping between sensor signals and contact points. In addition, we propose an azimuth prediction loss function that reduces the RMSE by 3.24° compared with an L1 loss. Finally, we conduct experiments on a linear stage to validate the 3D contact point localization capability of the proposed whisker system and model. The results show an inference time of 1.4 ms and a mean Euclidean error in 3D space of only 9.18 mm, laying a robust foundation for future tactile localization on UAVs.
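
The abstract does not spell out the azimuth loss or the evaluation code. As a rough illustration only, the sketch below shows one common way to respect the periodicity of an azimuth angle (taking the shortest angular distance, so 359° and 1° are 2° apart rather than 358°), together with the mean 3D Euclidean error metric quoted above. The function names `wrapped_azimuth_l1` and `mean_euclidean_error` are hypothetical, and the wrapped loss is an assumption, not the loss proposed in the paper.

```python
import math
from typing import Sequence


def wrapped_azimuth_l1(pred_deg: Sequence[float], target_deg: Sequence[float]) -> float:
    """Mean absolute azimuth error in degrees, respecting the 360-degree wrap.

    Hypothetical illustration; not the loss function from the paper.
    """
    total = 0.0
    for p, t in zip(pred_deg, target_deg):
        diff = (p - t) % 360.0            # raw difference mapped into [0, 360)
        total += min(diff, 360.0 - diff)  # take the shorter way around the circle
    return total / len(pred_deg)


def mean_euclidean_error(pred_xyz: Sequence[Sequence[float]],
                         target_xyz: Sequence[Sequence[float]]) -> float:
    """Mean 3D Euclidean distance between predicted and true contact points (input units)."""
    distances = [math.dist(p, t) for p, t in zip(pred_xyz, target_xyz)]
    return sum(distances) / len(distances)


if __name__ == "__main__":
    # A plain L1 loss would report 358 degrees here; the wrapped version reports 2.
    print(wrapped_azimuth_l1([359.0], [1.0]))
    print(mean_euclidean_error([(0.0, 0.0, 0.0)], [(3.0, 4.0, 0.0)]))
```

In a training framework the same wrap-around logic would be written with tensor operations; plain Python is used here only to keep the sketch self-contained.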

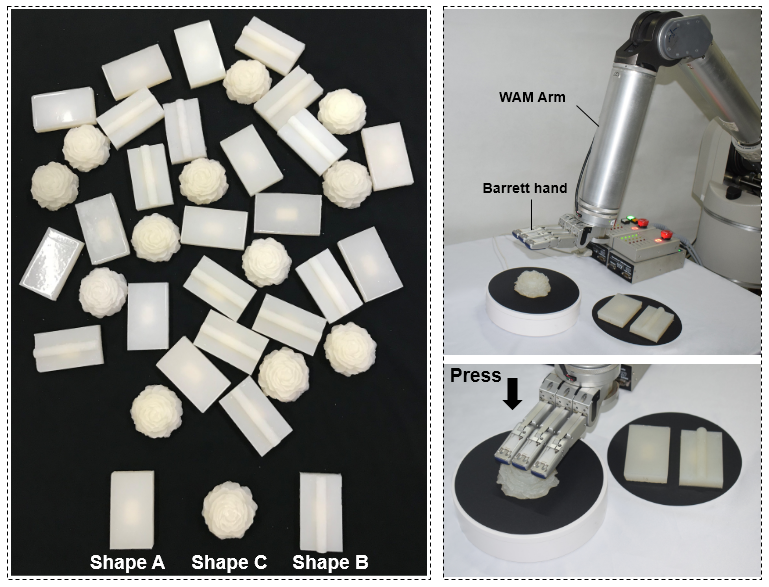

Chaoxiang Ye, Senlin Fang, Meng Yin, Xiaoyu Li, Zhengkun Yi, Xinyu Wu

Manuscript in preparation

We propose an ordinal classification method to recognize the hardness of objects. To evaluate it, we use a real robot to collect a tactile hardness dataset on silicone samples of three different shapes. Experimental results show that the proposed method outperforms state-of-the-art methods in both accuracy and quadratic weighted kappa (QWK).
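
Quadratic weighted kappa measures agreement between predicted and true ordinal labels, penalizing each disagreement by the squared distance between class indices, so confusing the softest sample with the hardest costs more than confusing neighboring hardness levels. It is defined as kappa = 1 - sum(w_ij * O_ij) / sum(w_ij * E_ij), where O is the observed confusion matrix, E is the matrix expected if predictions were independent of the true labels, and w_ij = (i - j)^2 / (K - 1)^2. The following is a minimal pure-Python sketch of this standard definition, assuming integer labels 0 to K-1 and K >= 2; the function name is hypothetical and is not taken from the manuscript.

```python
from typing import Sequence


def quadratic_weighted_kappa(y_true: Sequence[int], y_pred: Sequence[int], num_classes: int) -> float:
    """Quadratic weighted kappa for ordinal labels in range(num_classes), num_classes >= 2."""
    # Observed matrix: observed[i][j] counts samples with true class i and predicted class j.
    observed = [[0.0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1.0
    n = float(len(y_true))

    # Marginal histograms of the true and predicted labels.
    hist_true = [sum(row) for row in observed]
    hist_pred = [sum(observed[i][j] for i in range(num_classes)) for j in range(num_classes)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(num_classes):
        for j in range(num_classes):
            # Quadratic disagreement weight: 0 on the diagonal, 1 for the farthest classes.
            w = (i - j) ** 2 / (num_classes - 1) ** 2
            # Expected count if true and predicted labels were independent.
            expected = hist_true[i] * hist_pred[j] / n
            weighted_observed += w * observed[i][j]
            weighted_expected += w * expected
    # Raises ZeroDivisionError when weighted_expected is 0, e.g. all labels in one class.
    return 1.0 - weighted_observed / weighted_expected


if __name__ == "__main__":
    print(quadratic_weighted_kappa([0, 1, 2], [0, 1, 2], num_classes=3))  # perfect agreement
    print(quadratic_weighted_kappa([0, 1, 2], [2, 1, 0], num_classes=3))  # fully reversed order
```

Because the normalization by (K - 1)^2 appears in both sums, it cancels in the ratio, so the result matches implementations that use the unnormalized weight (i - j)^2, such as scikit-learn's `cohen_kappa_score` with `weights="quadratic"`.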

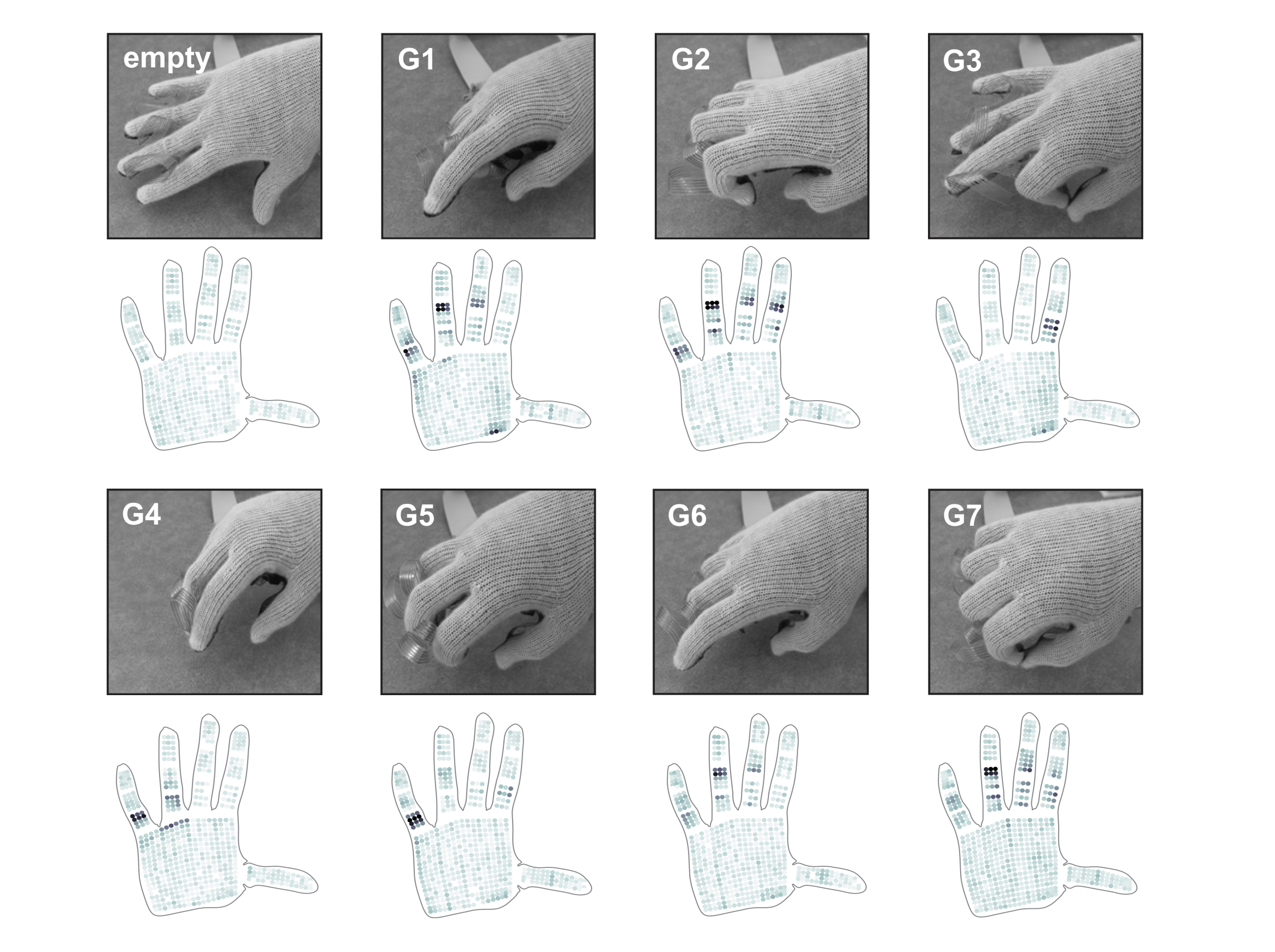

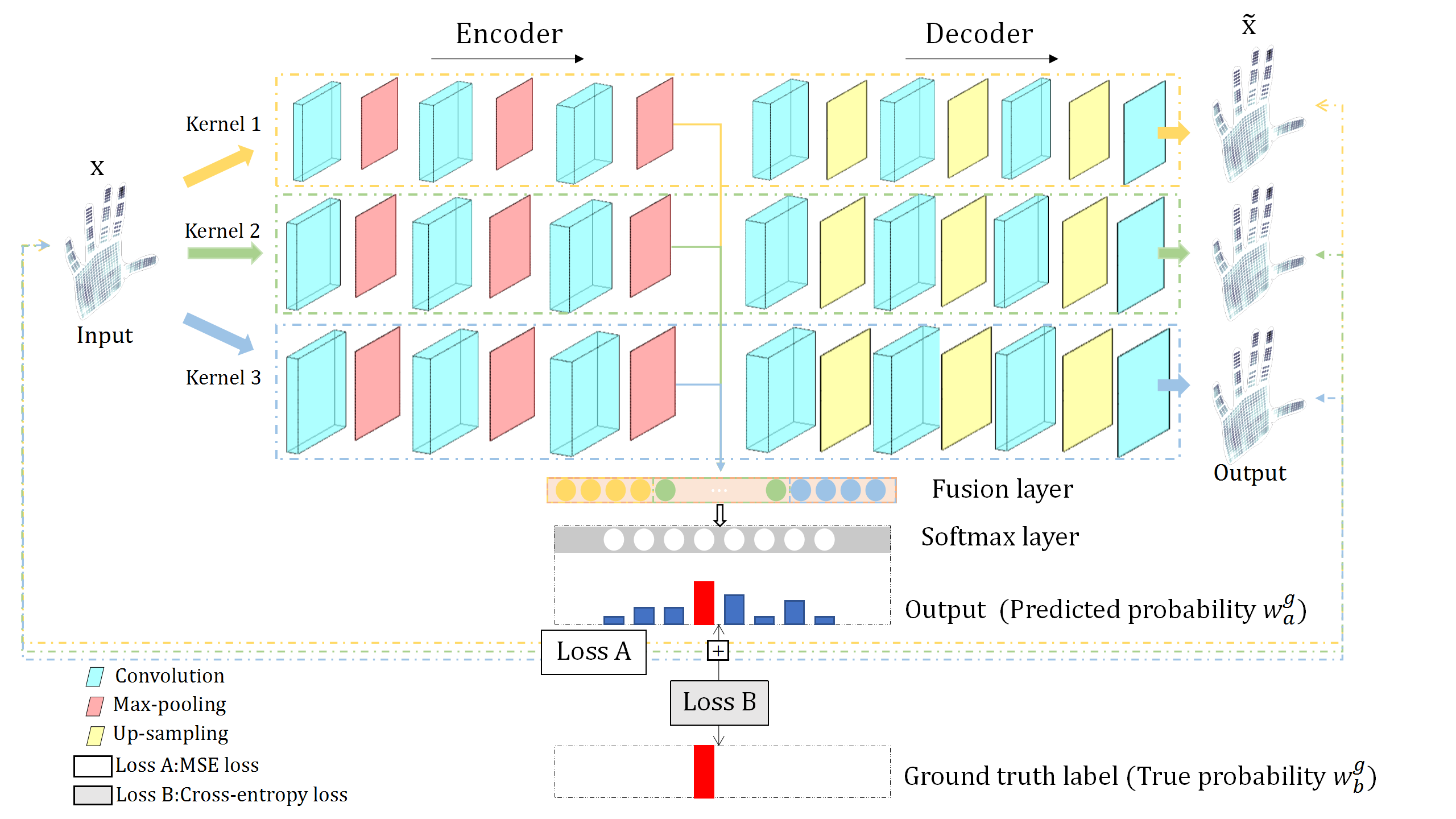

Chaoxiang Ye, Xiaoyu Li, Binhua Huang, Yuanzhe Su, Tingting Mi, Zhenning Zhou, Zhengkun Yi, Xinyu Wu

CYBER, 2022

paper

In this paper, we apply Supervised Autoencoders (SAE) to improve the generalization performance of gesture recognition. Building on the SAE, we further propose Multi-kernel-size Convolutional Supervised Autoencoders (MCSAE), which give the model receptive fields of several sizes and strengthen the feature extraction ability of the SAE, further improving generalization on limited datasets. Compared with other state-of-the-art (SOTA) models, the SAE achieves higher gesture recognition accuracy, and MCSAE further improves the generalization performance of the SAE on a sample-limited public dataset.
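
A supervised autoencoder is trained on a joint objective: a reconstruction term from the decoder plus a supervised term from a classifier head that shares the encoder, so the reconstruction term acts as a regularizer on the learned representation when labeled samples are scarce. Below is a minimal single-sample sketch of such an objective, assuming mean squared error for reconstruction, softmax cross-entropy for classification, and a hypothetical weighting parameter `recon_weight`; the exact loss terms and weights used for SAE and MCSAE in the paper may differ.

```python
import math
from typing import Sequence


def supervised_autoencoder_loss(
    x: Sequence[float],
    x_hat: Sequence[float],
    logits: Sequence[float],
    label: int,
    recon_weight: float = 1.0,
) -> float:
    """Illustrative SAE-style loss for one sample: cross-entropy + recon_weight * MSE."""
    # Reconstruction term: mean squared error between the input and the decoder output.
    mse = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

    # Supervised term: softmax cross-entropy of the classifier head,
    # computed with the log-sum-exp trick for numerical stability.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    cross_entropy = log_sum_exp - logits[label]

    return cross_entropy + recon_weight * mse


if __name__ == "__main__":
    # Perfect reconstruction and uninformative two-class logits: the loss equals ln 2.
    print(supervised_autoencoder_loss([0.5, 1.0], [0.5, 1.0], [0.0, 0.0], label=0))
```

In a real model, `x_hat` and `logits` would come from the decoder and the classifier head respectively, and the loss would be averaged over a batch and minimized with a gradient-based optimizer.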

Thanks to Dr. Jon Barron for sharing the source code of his personal page.